🚀 From Code to Kubernetes: Deploying Microservices at Scale! 🚀

From Code to Kubernetes: The Journey of Seeing the Bigger Picture

When I started my journey in software development, I believed the most critical part of the process was writing clean, efficient code. But as I moved through different stages — building pipelines, managing deployments, and architecting systems — I learned that there’s so much more involved in delivering applications that scale and remain resilient. The real challenge is ensuring all the pieces work together seamlessly, from writing code to running it in production at scale.

Here’s how I mapped out the entire lifecycle, step by step, and how each layer contributes to the whole.

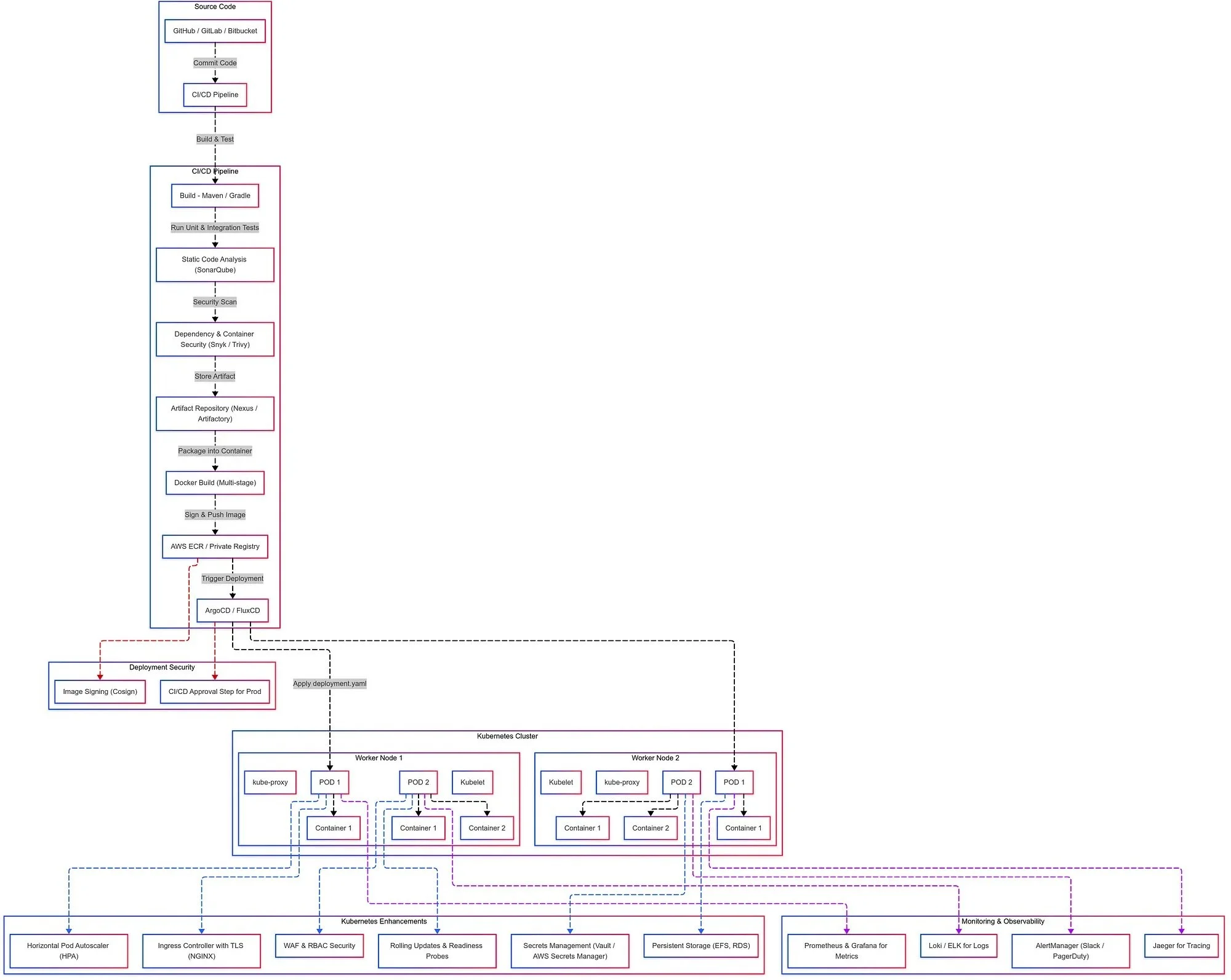

A structured flowchart illustrating the journey from code development to Kubernetes deployment. The diagram includes five key stages: Code Development (IDE, Git, Version Control), CI/CD Pipeline (Testing, Build, Integration), Containerization (Docker, Image Repository), Orchestration (Kubernetes, Scaling, Monitoring), and Production Deployment (Cloud, Resilience, Observability). Each stage is visually represented with icons and arrows connecting them, highlighting the seamless progression of the software lifecycle.

1. Build Layer: Crafting the Foundation

Code Commit and Build

At the core of every application is the code. But before it can run in production, it needs to be properly built and packaged for deployment.

🔹 Focus areas in the build process:

Modular Code: Code needs to be clean, maintainable, and easily testable. Each piece of functionality should be in its own module or service, especially when building with microservices.

Unit and Integration Testing: Unit tests check that individual functions and classes behave as expected, and integration tests check that components work together, before the code moves to the next phase. Automated testing catches bugs early, when they are cheapest to fix.

Code Quality Tools: Using static analysis tools like SonarQube to flag bugs, code smells, and common security issues before changes are merged.
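To make "modular and testable" concrete, here is a minimal Python sketch: a small, pure function kept in its own unit, plus `unittest` tests that pin down its behavior. The function `apply_discount` and its rules are hypothetical, chosen only to illustrate the pattern.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return `price` reduced by `percent` (0-100), rounded to cents.

    Hypothetical business rule, used only to illustrate a testable unit.
    """
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_invalid_percent(self):
        # Invalid input should fail loudly instead of producing a wrong price.
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)
```

In a pipeline, tests like these would typically run on every commit via `python -m unittest` or `pytest`, so a regression is caught before the code is packaged.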

💡 Key takeaway: Building a strong foundation starts with writing high-quality code that is modular, testable, and optimized for performance. A single bug in this layer can have downstream effects, so it’s critical to get this part right.

2. Continuous Integration (CI) and Continuous Delivery (CD) Layer: Automating for Speed and Quality

Once the code is written, the next step is to automate building, testing, and deploying it. CI/CD pipelines are what make it possible to ship software both quickly and safely.
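At its core, a pipeline runs stages in a fixed order and stops at the first failure. The Python sketch below shows only that fail-fast idea; the stage names and commands are hypothetical placeholders, and real pipelines declare this in Jenkins, GitHub Actions, or GitLab CI configuration rather than in a hand-written script.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import List


@dataclass
class Stage:
    name: str
    command: List[str]  # argv-style command, e.g. ["pytest", "-q"]


def run_pipeline(stages: List[Stage]) -> bool:
    """Run stages in order and stop at the first failure (fail fast)."""
    for stage in stages:
        print(f"==> {stage.name}")
        result = subprocess.run(stage.command)
        if result.returncode != 0:
            print(f"stage '{stage.name}' failed (exit code {result.returncode}); stopping")
            return False
    print("all stages passed")
    return True


if __name__ == "__main__":
    # Hypothetical stages; each runs a one-line Python command as a stand-in
    # for real build, test, and deploy tooling.
    pipeline = [
        Stage("build", [sys.executable, "-c", "print('building')"]),
        Stage("test", [sys.executable, "-c", "print('running tests')"]),
        Stage("deploy", [sys.executable, "-c", "print('deploying')"]),
    ]
    run_pipeline(pipeline)
```

Failing fast keeps broken builds from ever reaching the deploy stage, which is the property the CI/CD tools below provide at scale.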

CI/CD Pipeline

🔹 Key responsibilities in the CI/CD layer:

CI Pipelines: Automating compilation, testing, and packaging with tools like Jenkins, GitHub Actions, or GitLab CI. Running these steps on every change catches errors early and lets developers integrate their code continuously.

CD Pipelines: Automating deployment with tools like Argo CD, Flux, or Jenkins X, so changes move from test environments to production with little manual intervention.

Artifact Repositories: Storing build artifacts such as Docker images or JAR/WAR files in repositories like Nexus or Artifactory, so every deployment uses the exact, versioned artifact that passed the pipeline.

Security & Compliance: Adding pipeline steps that scan for vulnerabilities with tools like Snyk, Trivy, or OWASP Dependency-Check, helping ensure that code and images with known vulnerabilities don’t reach production.
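A security step usually boils down to "fail the build if the scanner reports findings at or above a chosen severity." Below is a minimal Python sketch of such a gate. It assumes a report shaped like Trivy’s JSON output (a `Results` list whose entries carry a `Vulnerabilities` list with `VulnerabilityID` and `Severity` fields); treat that shape and the `HIGH` threshold as assumptions to check against your scanner and version. Trivy itself can enforce the same rule through its `--severity` and `--exit-code` options.

```python
import json
from typing import List

# Severity levels from lowest to highest, as used by Trivy.
SEVERITY_ORDER = ["UNKNOWN", "LOW", "MEDIUM", "HIGH", "CRITICAL"]


def blocking_findings(report: dict, threshold: str = "HIGH") -> List[str]:
    """Return IDs of vulnerabilities at or above `threshold`.

    Assumes a Trivy-style report:
    {"Results": [{"Target": ..., "Vulnerabilities": [{"VulnerabilityID": ..., "Severity": ...}]}]}
    """
    minimum = SEVERITY_ORDER.index(threshold)
    blocking = []
    for result in report.get("Results", []):
        # "Vulnerabilities" may be missing or null for a clean target.
        for vuln in result.get("Vulnerabilities") or []:
            severity = vuln.get("Severity", "UNKNOWN")
            if severity in SEVERITY_ORDER and SEVERITY_ORDER.index(severity) >= minimum:
                blocking.append(vuln["VulnerabilityID"])
    return blocking


if __name__ == "__main__":
    # Inline sample with placeholder IDs; in CI you would json.load() the scanner output file.
    sample = json.loads("""
    {"Results": [
      {"Target": "app-image", "Vulnerabilities": [
        {"VulnerabilityID": "CVE-0000-0001", "Severity": "LOW"},
        {"VulnerabilityID": "CVE-0000-0002", "Severity": "CRITICAL"}
      ]}
    ]}
    """)
    print("blocking:", blocking_findings(sample))  # blocking: ['CVE-0000-0002']
```

A CI job would exit non-zero whenever the returned list is non-empty, turning the scan into a hard gate rather than an advisory report.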

Application Code Deployment Security

💡 Key takeaway: Automation in the CI/CD layer is what ensures fast, repeatable, and consistent delivery. The goal here is to deliver features quickly, without sacrificing security or stability.

3. Deployment Layer: Running Services at Scale

Once code is built and tested, it needs to be deployed in a way that’s scalable, resilient, and manageable. This is where containers and orchestration tools like Kubernetes come into play.

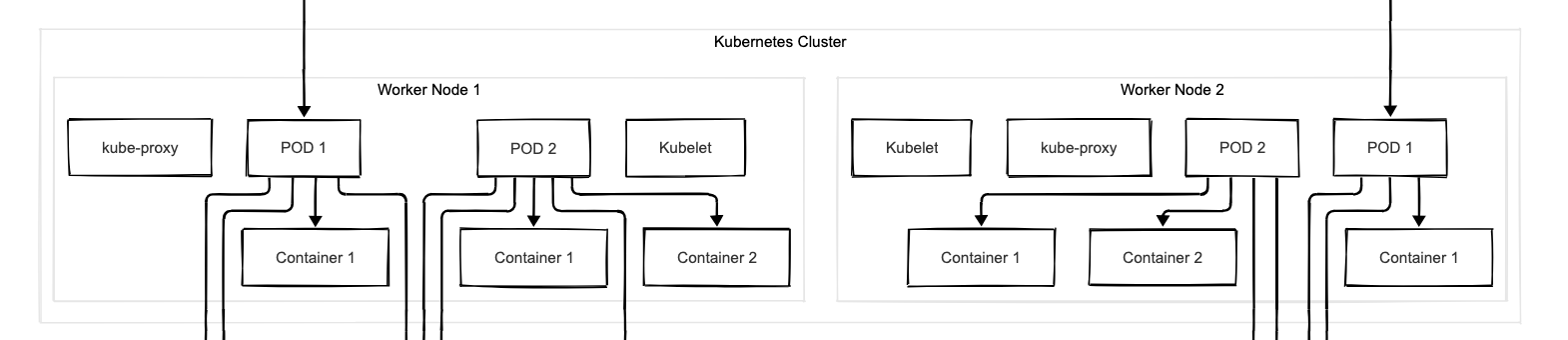

Kubernetes Cluster Orchestration

🔹 Key responsibilities in the deployment layer:

Containerization: Using Docker to package each application into a container image so it runs consistently across environments. In a microservices architecture, each service gets its own image, so it can be deployed and scaled independently.

Kubernetes for Orchestration: Managing and scaling containerized applications with Kubernetes. Kubernetes schedules containers (grouped into Pods) onto a cluster of nodes, scales them up and down, and restarts or replaces them when they fail, which keeps the system resilient and self-healing.

Helm Charts: Using Helm to define, install, and upgrade Kubernetes applications. Helm charts simplify Kubernetes deployments by providing a templated approach to managing Kubernetes resources.

Service Discovery: Enabling communication between microservices in a dynamic environment using Kubernetes DNS or service discovery tools like Consul.
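Kubernetes’ self-healing comes from controllers that repeatedly compare the desired state (for example, a Deployment’s replica count) with the observed state and act to close the gap. The Python sketch below models a single reconciliation pass for one workload; the `Cluster` class, pod phases, and naming scheme are hypothetical simplifications, not the Kubernetes API.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List

_pod_ids = itertools.count(1)  # hypothetical pod-name suffix generator


@dataclass
class Cluster:
    """Toy in-memory view of one workload's pods: name -> phase."""
    pods: Dict[str, str] = field(default_factory=dict)

    def running(self) -> List[str]:
        return [name for name, phase in self.pods.items() if phase == "Running"]


def reconcile(cluster: Cluster, app: str, desired_replicas: int) -> None:
    """One control-loop pass: move observed state toward the desired replica count."""
    # Clean up failed pods (a real controller would delete them via the API server).
    for name in [n for n, phase in cluster.pods.items() if phase == "Failed"]:
        del cluster.pods[name]

    running = cluster.running()
    if len(running) < desired_replicas:
        # Too few replicas: start replacements. This is the "self-healing" path.
        for _ in range(desired_replicas - len(running)):
            cluster.pods[f"{app}-{next(_pod_ids)}"] = "Running"
    elif len(running) > desired_replicas:
        # Too many replicas: remove the surplus (scale down).
        for name in running[desired_replicas:]:
            del cluster.pods[name]


if __name__ == "__main__":
    cluster = Cluster()
    reconcile(cluster, "web", 3)      # creates web-1, web-2, web-3
    cluster.pods["web-1"] = "Failed"  # simulate a crashed container
    reconcile(cluster, "web", 3)      # removes web-1 and starts a replacement
    print(sorted(cluster.pods))       # ['web-2', 'web-3', 'web-4']
```

Real controllers run this loop continuously, triggered by watch events from the API server, which is why a crashed Pod is replaced without anyone intervening.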

💡 Key takeaway: A well-structured deployment layer ensures that microservices are containerized, orchestrated efficiently, and able to scale to meet demand. Kubernetes acts as the backbone of this process.

4. Observability Layer: Ensuring Production Stability

Once services are deployed, it’s essential to continuously monitor and observe the system to ensure it’s running smoothly. In a microservices architecture, observability is critical to understanding the health of the system.

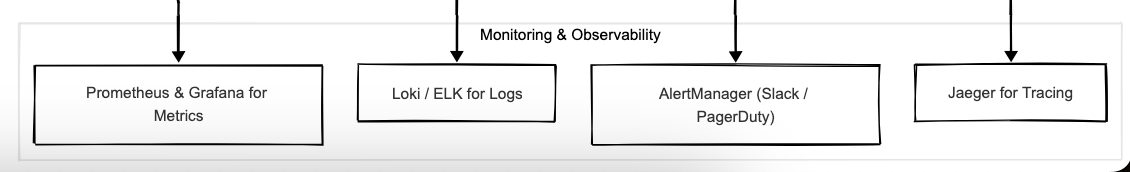

Application Monitoring & Observability

🔹 Key responsibilities in the observability layer:

Centralized Logging: Aggregating logs from different services using tools like ELK Stack (Elasticsearch, Logstash, and Kibana) or Loki helps trace issues across distributed services.

Metrics and Monitoring: Using Prometheus to collect metrics and Grafana to visualize them, making it easy to check that latency, error rates, and resource usage stay within expected bounds.

Distributed Tracing: Implementing tools like Jaeger or Zipkin to trace requests across multiple services, helping diagnose performance bottlenecks and pinpoint failures in a microservices environment.

Alerting and Incident Response: Integrating Slack, PagerDuty, or Opsgenie to notify teams of critical issues in real time, so they can respond quickly to failures.
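Centralized logging and tracing work best when every service emits structured log lines that carry a shared trace or request ID, so an aggregator such as the ELK Stack or Loki can correlate entries across services. Here is a minimal sketch using Python’s standard `logging` module; the JSON field names (`service`, `trace_id`) are illustrative assumptions, not a required schema.

```python
import json
import logging
import sys
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "service": self.service,
            "message": record.getMessage(),
            # trace_id is supplied per call via `extra=`; None if the caller omitted it.
            "trace_id": getattr(record, "trace_id", None),
        }
        return json.dumps(entry)


def make_logger(service: str) -> logging.Logger:
    logger = logging.getLogger(service)
    if not logger.handlers:  # avoid stacking duplicate handlers on repeated calls
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(JsonFormatter(service))
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
        logger.propagate = False
    return logger


if __name__ == "__main__":
    log = make_logger("checkout")
    # In a real service the trace ID would come from the incoming request's headers.
    trace_id = uuid.uuid4().hex
    log.info("order received", extra={"trace_id": trace_id})
    log.warning("payment provider slow, retrying", extra={"trace_id": trace_id})
```

Because each line is self-describing JSON, the log backend can filter on `trace_id` to reconstruct one request’s path across services, complementing a tracer like Jaeger or Zipkin.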

💡 Key takeaway: Observability is essential for diagnosing and fixing problems quickly. In a microservices environment, understanding how services interact and monitoring their health is crucial to ensuring stability and performance.

5. Security Layer: Protecting the Application

As systems scale and evolve, security must be integrated at every stage. From code to deployment, securing the entire application stack is a critical part of maintaining a resilient and reliable system.

🔹 Key responsibilities in the security layer:

Static and Dynamic Code Analysis: Using SonarQube for static analysis of source code and OWASP ZAP for dynamic testing of the running application, to identify vulnerabilities early in the development cycle.

API Security: Securing communication between microservices with OAuth2 and JWTs to authenticate and authorize requests, and mTLS to encrypt and mutually authenticate service-to-service connections.

Secret Management: Using tools like Vault or AWS Secrets Manager to securely manage credentials and sensitive data used by the application.

Network Security: Using firewalls and WAFs (Web Application Firewalls) to filter traffic so that only authorized clients can reach services.
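To make the JWT part of API security concrete, here is a minimal Python sketch of how a service might verify an HS256-signed token (HMAC-SHA256 over `header.payload`, as defined by the JWS/JWT RFCs) and reject expired ones, using only the standard library. The secret and claims are illustrative; in practice the key would come from a secret manager such as Vault, and a maintained JWT library would handle parsing.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWTs strip base64 padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if the signature and expiry check out; raise ValueError otherwise."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Never let the token pick its own algorithm (e.g. "none").
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):  # constant-time compare
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    now = time.time() if now is None else now
    if "exp" in claims and now >= claims["exp"]:
        raise ValueError("token expired")
    return claims


if __name__ == "__main__":
    secret = b"demo-secret"  # illustrative only; load real keys from a secret manager
    token = sign_hs256({"sub": "orders-service", "exp": time.time() + 300}, secret)
    print(verify_hs256(token, secret)["sub"])  # orders-service
```

Pinning the expected algorithm and comparing signatures in constant time are the two details most often gotten wrong when tokens are checked by hand.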

💡 Key takeaway: Security is not an afterthought — it should be baked into every layer, from code to deployment, to ensure the system is protected against threats at all times.

6. Architecture and Design Layer: Building for the Future

As applications grow, it’s essential to design systems that are not only functional today but can also scale and evolve as needed in the future. Microservices architecture and cloud-native technologies are key to ensuring long-term adaptability.
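Automatic scaling with the Kubernetes Horizontal Pod Autoscaler (HPA), listed below, rests on a proportional rule documented by Kubernetes: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default) and the result clamped to the configured minimum and maximum. A simplified Python sketch of that calculation follows; it deliberately ignores stabilization windows, missing metrics, and Pod readiness, which the real controller also handles.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int, tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale proportionally to metric / target, then clamp."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> ceil(4 * 1.5) = 6.
    print(desired_replicas(4, 90, 60, min_replicas=1, max_replicas=10))  # 6
```

The tolerance band is what keeps the autoscaler from flapping when load hovers right around the target.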

Kubernetes Enhancements

🔹 Key responsibilities in the architecture layer:

Scalability: Designing systems that scale automatically, for example with the Kubernetes Horizontal Pod Autoscaler (HPA), so performance holds up during traffic spikes.

Resilience: Implementing strategies for fault tolerance, including circuit breakers, retry logic, and health checks for microservices to handle failures gracefully.

Multi-Cloud Readiness: Building systems that can be migrated or deployed across different cloud platforms, reducing vendor lock-in.

API Gateways: Using tools like NGINX or Kong to manage ingress traffic, routing, and authentication for microservices.
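Circuit breakers and retries are often provided by a service mesh or a resilience library, but the mechanism is small enough to sketch. Below is a minimal, single-threaded Python version: after `failure_threshold` consecutive failures the breaker "opens" and fails fast for `reset_timeout` seconds, then lets one trial call through ("half-open"). A retry helper with exponential backoff sits on top. All names, defaults, and the injectable clock are illustrative assumptions.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: half-open, so this single trial call goes through.
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open after a failed trial)
            raise
        # Any success closes the circuit and resets the consecutive-failure count.
        self.failures = 0
        self.opened_at = None
        return result


def retry(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.1,
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `fn` with exponential backoff, but never hammer an open circuit."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)  # 0.1s, 0.2s, 0.4s, ...
    raise RuntimeError("attempts must be at least 1")
```

Typical usage wraps a downstream call as `retry(lambda: breaker.call(fetch_inventory))`, where `fetch_inventory` is a hypothetical client function: transient errors are retried, while a persistently failing dependency trips the breaker and callers fail fast instead of piling up.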

💡 Key takeaway: Designing for the future involves building a flexible and scalable architecture that can adapt to changing requirements while ensuring the system remains robust and fault-tolerant.

The Bigger Picture: Building a Resilient, Scalable System

Every layer — from build to architecture — plays a vital role in the success of an enterprise-grade application. Each part feeds into the next, creating an ecosystem where:

🔹 Developers write maintainable, testable, and scalable code.

🔹 CI/CD pipelines automate the process of building and deploying quickly and securely.

🔹 Kubernetes orchestrates the deployment and scaling of microservices.

🔹 Observability tools help monitor and diagnose production systems.

🔹 Security measures protect the entire stack from vulnerabilities.

🔹 Architects design systems for long-term success and adaptability.

When all these layers come together, the result is a scalable, resilient, and adaptable application that can grow and evolve with the business.

Final Thoughts: Are You Seeing the Whole Picture?

Whether you’re building, deploying, or maintaining an application, it’s easy to get focused on just one part of the process. But for systems to truly thrive at scale, we need to think beyond just code, CI/CD, or Kubernetes. We must consider every layer that contributes to the application lifecycle.

Next time you work on a project, ask yourself: “Am I optimizing the entire journey?”

What challenges have you faced in building, deploying, or managing scalable applications? Let’s discuss! 🚀

If this journey resonates with you or if you’ve tackled similar challenges, let’s connect and exchange ideas!

🔔 Follow me: LinkedIn